Journal of Bionic Engineering (2025) 22:1557–1572 https://doi.org/10.1007/s42235-025-00688-7

RFLE-Net: Refined Feature Extraction and Low-Loss Feature Fusion Method in Semantic Segmentation of Medical Images

Fan Zhang1 · Zihao Zhang2,3,4 · Huifang Hou4 · Yale Yang1 · Kangzhan Xie1 · Chao Fan4 · Xiaozhen Ren4 · Quan Pan5

1 School of Information Science and Engineering, Henan University of Technology, Zhengzhou 450001, China

2 Key Laboratory of Grain Information Processing and Control (Henan University of Technology), Ministry of Education, Henan University of Technology, 450001 Zhengzhou, China

3 Henan Key Laboratory of Grain Photoelectric Detection and Control, Henan University of Technology, Zhengzhou 450001, China

4 School of Artificial Intelligence and Big Data, Henan University of Technology, Zhengzhou 450001, China

5 School of Automation, Northwestern Polytechnical University, 710000 Xi’an, China

Abstract

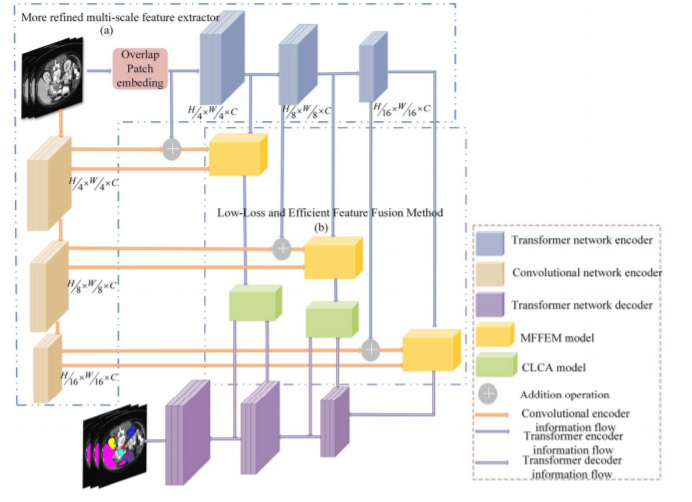

The application of Transformer networks and feature fusion models to medical image segmentation has attracted considerable attention in the research community. Nevertheless, two main obstacles persist: (1) the limitations of the Transformer network in handling fine local detail, and (2) the considerable loss of feature information in current feature fusion modules. To address these issues, this study first presents a refined feature extraction approach that employs a dual-branch feature extraction network to capture complex multi-scale local and global information from images. We then propose a low-loss feature fusion method, the Multi-branch Feature Fusion Enhancement Module (MFFEM), which achieves effective feature fusion with minimal loss. In addition, a cross-layer cross-attention fusion module (CLCA) is adopted to further strengthen feature fusion by enhancing the interaction between encoders and decoders at different scales. Finally, the method was evaluated on the Synapse and ACDC datasets, where it proved competitive: the average DSC was 83.62% and 91.99%, respectively, and the average HD95 was reduced to 19.55 mm and 1.15 mm, respectively.
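For readers unfamiliar with the DSC figures quoted above, the following is a minimal sketch of the standard Dice similarity coefficient, DSC = 2|P ∩ G| / (|P| + |G|), averaged over foreground labels. It is not the authors' evaluation code: the function names `dice_coefficient` and `mean_dice`, the flat-list input format, and the convention of returning 1.0 when a label is absent from both masks are assumptions made for illustration only.

```python
def dice_coefficient(pred, target, label):
    """Dice similarity coefficient for one label.

    `pred` and `target` are flat sequences of integer class labels
    (hypothetical input format, e.g. a flattened segmentation mask).
    """
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")
    pred_count = sum(1 for p in pred if p == label)
    target_count = sum(1 for t in target if t == label)
    overlap = sum(1 for p, t in zip(pred, target) if p == label and t == label)
    denom = pred_count + target_count
    if denom == 0:
        # Assumption: a label missing from both masks counts as full agreement.
        return 1.0
    return 2.0 * overlap / denom


def mean_dice(pred, target, labels):
    """Average the per-label Dice score over the given foreground labels."""
    return sum(dice_coefficient(pred, target, c) for c in labels) / len(labels)


# Toy example with background 0 and two foreground labels 1 and 2.
pred = [0, 1, 1, 2, 2, 2, 0, 0]
target = [0, 1, 1, 1, 2, 2, 0, 2]
print(dice_coefficient(pred, target, 1))
print(mean_dice(pred, target, [1, 2]))
```

Multiplying the result by 100 gives a percentage comparable in form to the DSC (%) values reported in the abstract. HD95, the 95th-percentile Hausdorff distance, additionally requires boundary extraction and voxel spacing and is not sketched here.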

Keywords Multi-organ medical image segmentation · Fine-grained dual-branch feature extractor · Low-loss feature fusion module

Copyright © 2025 International Society of Bionic Engineering All Rights Reserved
